As 2023 unfolds, the misuse of AI in cybercrime is escalating alongside a broader surge in attacks. In the first half of the year, 48 ransomware groups claimed more than 2,200 victims, and LockBit alone recorded a 20% increase in victims over the previous year.

This uptick in cybercrime is not just about numbers; it also reflects shifting tactics and targets. The U.S. bore the brunt, accounting for 45% of these attacks, while Russia saw a notable surge, including attacks by “MalasLocker”, a group that replaced ransom demands with requests for charitable donations.

This blog post explores the top seven privacy risks that AI poses in business environments. We aim to unpack the complexities of AI privacy and the intersection of privacy and security in AI systems, and to offer practical solutions for navigating this landscape.

Our discussion emphasizes not only the importance of protecting personal data but also how maintaining privacy is crucial for individual autonomy and dignity in an increasingly AI-driven world.

Table of Contents

- What Are the Privacy Concerns Regarding AI?

- Top 7 AI Privacy Risks Faced by Businesses

- How Can Businesses Solve AI Privacy Issues?

- Benefits of Addressing AI and Privacy Issues

- Kanerika: The Leading AI Implementation Partner in USA

- FAQs

What Are the Privacy Concerns Regarding AI?

Artificial Intelligence (AI) is set to make a colossal global impact: PwC predicts it will contribute $15.7 trillion to the world economy by 2030, with local GDPs potentially up to 26% higher, across roughly 300 identified AI use cases. While AI promises significant productivity gains and stronger consumer demand, it also raises pronounced privacy concerns.

The main concern is AI’s growing reliance on personal data, which heightens the risk of data breaches and misuse. Generative AI, for instance, can be misused to create fake profiles or manipulated images, exposing both privacy and security weaknesses. As Harsha Solanki of Infobip notes, 80% of businesses globally grapple with cybercrime due to improper handling of personal data. This underscores the urgent need for effective measures to protect customer information and ensure AI is used ethically.

What Type of Data Do AI Tools Collect?

In 2020, over 500 healthcare providers were hit by ransomware attacks, highlighting the urgent need for robust data protection, especially in sensitive sectors like healthcare and banking. These industries, governed by strict data privacy laws, face significant risks when it comes to the type of data collected by AI tools.

AI tools gather a wide range of data, including Personally Identifiable Information (PII). As defined by the U.S. Department of Labor, PII is information that can identify an individual directly or indirectly, such as names, addresses, email addresses, and birthdates. AI systems collect this information in various ways, from direct customer input to more covert methods such as facial recognition, often without the individuals’ awareness.

This covert collection of data raises substantial privacy concerns. Healthcare providers, for instance, are bound by HIPAA to safeguard patient data while delivering care. The 2020 ransomware attacks show how vulnerable these sectors are to data breaches, which carry financial consequences and also compromise patient privacy and trust.

AI Privacy Laws Around the World

The landscape of AI privacy and data laws varies widely, with significant developments in the European Union, the United States, and elsewhere. The EU’s AI Act and the GDPR together set a comprehensive framework for AI, classifying systems by risk and emphasizing data protection, which underscores the EU’s commitment to ethical AI.

In contrast, the U.S. lacks a unified federal AI law and relies on sector-specific regulations and state laws such as California’s CCPA, which governs consumer data privacy, including personal data processed by AI systems.

Other countries are also shaping their AI legal landscapes. Canada’s proposed Artificial Intelligence and Data Act would complement its existing privacy laws, while the UK focuses on AI ethics guidelines and on data protection overseen by the Information Commissioner’s Office (ICO).

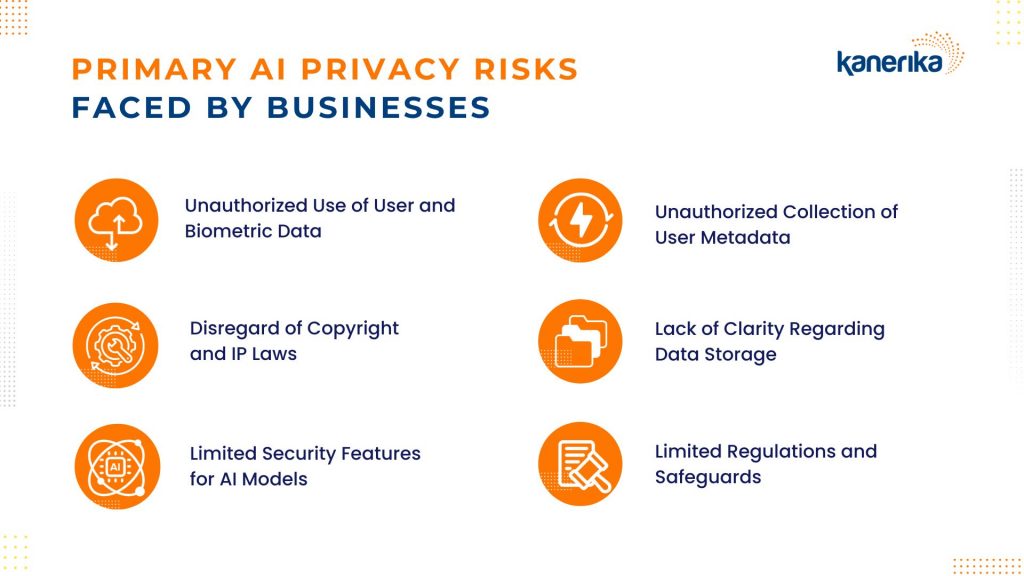

Top 7 AI Privacy Risks Faced by Businesses

1. Unauthorized Use of User Data

One of the major AI privacy risks for businesses is the unauthorized use of user data. A notable example is Apple’s restriction on employees’ use of AI tools such as OpenAI’s ChatGPT, driven by concerns about leaks of confidential data. The decision, reported by The Wall Street Journal, reflects the risk that sensitive data entered into an AI tool could become part of the model’s future training data without explicit consent.

OpenAI, which by default stores conversations with ChatGPT, introduced an option to disable chat history after privacy concerns were raised. Even then, conversations are retained for 30 days, which still leaves room for unintended exposure. Practices like these can create legal exposure under regulations such as the GDPR, along with ethical dilemmas, privacy breaches, significant fines, and reputational damage. Businesses therefore need to ensure that user data entered into AI systems is handled ethically and lawfully to maintain customer trust and compliance.

2. Disregard of Copyright and IP Laws

A significant AI privacy risk for businesses is the disregard for copyright and intellectual property (IP) laws. AI models frequently pull training data from diverse web sources, often using copyrighted material without proper authorization.

The US Copyright Office’s initiative to gather public comments on rules regarding generative AI’s use of copyrighted materials highlights the complexity of this issue. Major AI companies, including Meta, Google, Microsoft, and Adobe, are actively involved in this discussion.

For instance, Adobe, with its generative AI tools like the Firefly image generator, faces challenges in balancing innovation with copyright compliance. The company’s involvement in drafting an anti-impersonation bill and the Content Authenticity Initiative reflects the broader industry struggle to navigate the gray areas of AI and copyright law.

3. Unauthorized Use of Biometric Data

Another significant AI privacy risk is the unauthorized use of biometric data. Worldcoin, a project backed by OpenAI co-founder Sam Altman, illustrates the issue. It uses a device called the Orb to collect iris scans and create a unique digital ID for each individual. The project, which has been rolled out largely in developing countries, has sparked privacy concerns over the potential misuse of the collected biometric data and the lack of transparency in how it is handled. Despite assurances that data is deleted after scanning, the creation of unique hashes for identification and reports of a black market for iris scans highlight the risks of this kind of data collection.

4. Limited Security Features for AI Models

Many AI models lack built-in cybersecurity features, which poses significant risks, especially when they handle Personally Identifiable Information (PII). This security gap leaves models open to unauthorized access and misuse. As AI evolves, it also introduces new vulnerabilities: essential components such as MLOps pipelines, inference servers, and data lakes need robust protection against breaches, including compliance with standards like FIPS and PCI DSS. Machine learning models are also attractive targets for adversarial attacks, which can lead to financial losses and breaches of user privacy.

5. Unauthorized Collection of User Metadata

In July, Meta reported that Reels were growing in popularity among both users and advertisers, driven by AI-powered algorithms. Behind this success, however, lies a growing privacy concern: the unauthorized collection of user metadata by AI technologies. Meta’s Reels and TikTok show how user interactions, including engagement with ads and videos, accumulate into metadata. This metadata, which includes search history and interests, is used for precise content targeting. AI has expanded the scope and efficiency of this data collection, which often happens without users’ explicit awareness or consent.

6. Lack of Clarity Regarding Data Storage

Amazon Web Services (AWS) recently expanded its data storage capabilities by integrating services such as Amazon Aurora PostgreSQL and Amazon RDS for MySQL with Amazon Redshift, enabling more efficient analysis of data across databases. A broader issue in AI, however, is the lack of transparency about data storage. Few AI vendors clearly disclose how long, where, and why they store user data, and even transparent vendors often retain data for long periods, raising concerns about how that data is managed and secured over time.
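One way to make storage practices verifiable is to attach an explicit purpose and retention period to every stored record and enforce that period in code. The sketch below assumes a hypothetical 30-day retention window for logged AI prompts (the same period mentioned above for ChatGPT conversations); the `StoredPrompt` type and `purge_expired` helper are illustrative names, not part of any vendor’s API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredPrompt:
    user_id: str
    text: str
    stored_at: datetime  # timezone-aware timestamp of when the prompt was logged
    purpose: str         # documented reason for keeping the record


# Hypothetical policy: keep prompt logs for 30 days, then delete them.
RETENTION = timedelta(days=30)


def purge_expired(records: list[StoredPrompt], now: datetime) -> list[StoredPrompt]:
    """Return only the records that are still inside the retention window."""
    cutoff = now - RETENTION
    return [r for r in records if r.stored_at >= cutoff]


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    StoredPrompt("u1", "Draft a reply to this customer", datetime(2024, 1, 15, tzinfo=timezone.utc), "abuse monitoring"),
    StoredPrompt("u2", "Summarize this contract", datetime(2023, 12, 1, tzinfo=timezone.utc), "abuse monitoring"),
]
print([r.user_id for r in purge_expired(records, now)])  # ['u1']
```

Keeping the purpose next to each record also makes it easier to answer the “why” question, not just the “how long” one, when customers or regulators ask.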

7. Limited Regulations and Safeguards

The current state of AI regulation is marked by limited safeguards and a lack of consistent global standards, which creates privacy risks for businesses. A 2023 Deloitte study put this gap at 56%, pointing to a significant shortfall in how organizations understand and implement ethical guidelines for generative AI.

Regulatory efforts vary internationally. Dutch diplomat Ed Kronenburg notes that rapid advances in AI, exemplified by tools like ChatGPT, call for swift regulatory action to keep pace with technological developments.

How Can Businesses Solve AI Privacy Issues?

Businesses today face the challenge of harnessing the power of AI while ensuring the privacy and security of their data. Addressing AI privacy issues requires a multi-faceted approach, focusing on policy, data management, and the ethical use of AI.

Establish an AI Policy for Your Organization

To tackle AI and privacy concerns, companies need a robust AI policy. This policy should clearly define the boundaries for using AI tools, particularly when handling sensitive data such as protected health information (PHI) and payment information.

Such a policy is vital for organizations facing privacy and security challenges in AI, because it ensures that all personnel understand their responsibilities for maintaining data privacy and security. It is an important step toward addressing the ethical issues AI raises for privacy, especially in large, security-conscious enterprises.
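Parts of such a policy can be enforced in software. As a minimal sketch, assuming the policy forbids pasting payment card numbers into external AI tools, a gate like the one below could check prompts before they leave the organization. The function names (`contains_card_number`, `allowed_to_send`) are hypothetical, and the pattern is deliberately simple; it applies the Luhn checksum, which valid payment card numbers satisfy, to cut down false positives from other long digit strings.

```python
import re

# Runs of 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"(?:\d[ -]?){13,19}")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by card numbers."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """Detect digit runs that look like, and checksum as, a payment card number."""
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            return True
    return False


def allowed_to_send(prompt: str) -> bool:
    """Hypothetical policy gate: refuse prompts that contain card numbers."""
    return not contains_card_number(prompt)


print(allowed_to_send("Summarize our Q3 product roadmap"))        # True
print(allowed_to_send("Refund card 4111 1111 1111 1111, please"))  # False
```

A real deployment would also log blocked attempts and cover PHI and other payment fields; this sketch only shows how a written rule can become an automated check.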

Use Non-Sensitive or Synthetic Data

A key strategy for mitigating AI privacy risks is to use non-sensitive or synthetic data, which avoids the risks of handling sensitive customer data altogether.

For projects that do require sensitive data, safer alternatives such as digital twins or data anonymization can help reduce privacy and security risks.

Synthetic data is particularly effective because it preserves privacy while still letting businesses benefit from AI’s capabilities.
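In practice, anonymization often starts with pseudonymizing direct identifiers and generalizing quasi-identifiers before data reaches an AI pipeline. The sketch below assumes a record with hypothetical `name`, `email`, `birthdate`, and `plan` fields: it replaces the email with a keyed hash (HMAC-SHA256), keeps only the birth year, and drops everything the model does not need. Keyed hashing is pseudonymization rather than full anonymization; under the GDPR, pseudonymized data still counts as personal data, because whoever holds the key can re-link records.

```python
import hashlib
import hmac

# Assumption: in a real system the key lives in a secrets manager, separate from the data.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def prepare_record(record: dict) -> dict:
    """Hypothetical pre-processing step applied before a record reaches an AI pipeline."""
    return {
        "customer_id": pseudonymize(record["email"]),
        # Generalize the exact birth date (YYYY-MM-DD) to the year only.
        "birth_year": record["birthdate"][:4],
        # Keep only the non-identifying field the model actually needs.
        "plan": record["plan"],
    }


raw = {"name": "Jane Doe", "email": "Jane.Doe@example.com", "birthdate": "1990-04-17", "plan": "premium"}
print(prepare_record(raw))
```

Using a keyed hash rather than a plain SHA-256 matters here: unkeyed hashes of email addresses can be reversed by hashing candidate addresses, whereas the HMAC output cannot be recomputed without the key.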

Encourage Use of Data Governance and Security Tools

To further strengthen AI privacy, businesses should deploy data governance and security tools.

These tools, such as extended detection and response (XDR), data loss prevention (DLP), and threat intelligence platforms, help manage and safeguard data, a critical part of securing AI systems.

They also support regulatory compliance and help maintain the integrity of AI systems.
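DLP tools typically scan outgoing text for sensitive patterns before it leaves a controlled environment, for example before a prompt is sent to an external AI service. The sketch below illustrates that mechanism with a few hypothetical regular expressions for email addresses, U.S. Social Security numbers, and U.S.-style phone numbers. Commercial DLP products use far richer detection, so treat this as an illustration only.

```python
import re

# Illustrative patterns only; production DLP tools use far richer detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Mask PII matches with a placeholder and report which categories were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


print(redact("Contact jane@example.com or 555-123-4567, SSN 123-45-6789."))
# → ('Contact [EMAIL] or [PHONE], SSN [SSN].', ['EMAIL', 'SSN', 'PHONE'])
```

Returning the detected categories alongside the masked text lets a calling system decide whether to block the request outright or forward the redacted version.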

Carefully Read Documentation of AI Technologies

To address AI privacy issues, businesses must thoroughly understand the AI technologies they use. This means carefully reading vendor documentation to learn how the tools operate and which principles guide their development.

This step is crucial for recognizing and mitigating potential privacy issues and is part of handling AI ethically. Asking the vendor to clarify anything ambiguous can further help businesses navigate privacy concerns.

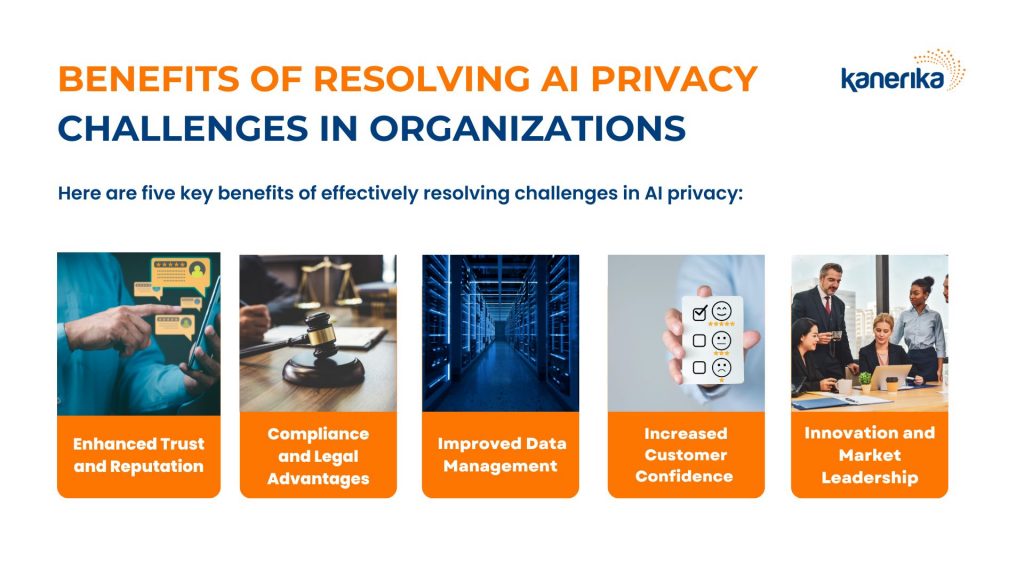

Benefits of Addressing AI and Privacy Issues

Navigating AI and privacy issues is more than meeting regulatory demands; it’s a strategic choice that offers multiple benefits to organizations. Here are five key advantages of effectively managing AI privacy concerns:

Enhanced Trust and Reputation

Proactively tackling AI and privacy issues significantly bolsters a business’s reputation and builds trust with customers, partners, and stakeholders. In an age where data breaches are prevalent, a robust stance on privacy sets a company apart. This trust is crucial, particularly in sectors like healthcare or finance, where sensitivity to AI privacy issues is high.

Compliance and Legal Advantage

Effectively addressing AI privacy risks helps ensure compliance with data protection laws such as the GDPR and HIPAA. This proactive approach prevents legal repercussions and establishes the company as a responsible user of AI technologies, a competitive edge in environments where regulatory compliance is critical.

Improved Data Management

Confronting AI privacy risks leads to better data management practices. It requires an in-depth understanding of how data is collected, used, and stored, in line with the ethical issues AI raises. The result is more efficient data management, with lower storage costs and streamlined analysis, both crucial for mitigating privacy and security issues in AI.

Increased Customer Confidence

When companies show a commitment to privacy, customers feel more secure engaging with their services. Addressing AI and privacy concerns enhances customer trust and loyalty, which is crucial as AI technologies evolve rapidly. This confidence can drive business growth and improve customer retention.

Innovation and Market Leadership

Resolving AI and privacy issues paves the way for innovation, especially in privacy-preserving AI technologies. Leading in this space opens new opportunities as demand for ethical and responsible AI solutions grows, and it attracts talent keen on advancing ethical AI and privacy.

In sum, addressing AI and privacy risks is not just about avoiding negatives; it is an opportunity to strengthen trust, ensure compliance, enhance data management, boost customer confidence, and lead innovation in the complex landscape of big data and AI.

Kanerika: The Leading AI Implementation Partner in USA

As discussed throughout this article, businesses today need to balance AI innovation with privacy concerns. The best way to achieve that balance is to work with a trusted AI implementation partner that has the technical expertise to monitor regulations, stay compliant, and ensure data privacy.

Kanerika stands out as a leading AI implementation partner in the USA, offering an unmatched blend of innovation, expertise, and compliance.

This unique positioning makes Kanerika an ideal choice for businesses looking to navigate the complexities of AI implementation while ensuring strict adherence to privacy and compliance standards.

Recognizing the unique challenges and opportunities in AI, Kanerika begins each partnership with a comprehensive pre-assessment of your specific needs. Kanerika’s team of experts conducts an in-depth analysis, crafting tailored recommendations that align with the nuances of your business, which is particularly critical for sectors such as healthcare where data sensitivity is paramount.

Embarking on a journey with Kanerika means taking a significant step towards developing an AI solution that precisely fits your business needs.

Sign up for a free consultation today!

FAQs

What are the privacy concerns of using AI?

Privacy concerns of using AI include the potential for data breaches, unauthorized use or misuse of personal data, and lack of transparency in data collection and usage. AI systems, especially those involving large datasets, can inadvertently expose sensitive information or be used to infer private details about individuals.

What are the risks of generative AI and privacy?

Generative AI poses risks such as the creation of deepfakes, misuse of personal data to generate realistic but fake content, and the potential of AI models to inadvertently learn and reproduce private information. This can lead to breaches of confidentiality, identity theft, and spread of misinformation.

How do you fix privacy issues in AI?

Fixing privacy issues in AI involves establishing clear data governance policies, using non-sensitive or synthetic data for training AI models, implementing robust security measures, and ensuring compliance with privacy laws. Regular audits and updates to AI systems can also help in addressing evolving privacy challenges.

What are the legal issues surrounding AI?

Legal issues surrounding AI include compliance with data protection laws like GDPR, intellectual property rights challenges, liability in case of AI-induced damages or errors, and ethical concerns such as bias and discrimination. Navigating these legal complexities requires a thorough understanding of both AI technology and the legal landscape.

Is AI good for privacy?

AI can be both beneficial and challenging for privacy. While AI technologies can enhance privacy protections through improved security measures and fraud detection, they can also pose threats to privacy if not managed correctly. The impact of AI on privacy largely depends on its application and the measures taken to safeguard data.

What is an example of AI and privacy?

An example of AI and privacy is the use of AI-driven facial recognition technology. While it can enhance security and authentication processes, it raises privacy concerns due to the potential for surveillance and tracking without consent.

What is privacy preserving AI?

Privacy preserving AI refers to AI systems designed to protect users' privacy. Techniques like differential privacy, federated learning, and homomorphic encryption are used to ensure that AI can learn from data without compromising the privacy of the individuals whose data is being analyzed.
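To make differential privacy concrete: the Laplace mechanism answers a query, such as “how many patients have condition X?”, and adds random noise calibrated so that any one person’s presence or absence barely changes the published result. The minimal sketch below uses only Python’s standard library and assumes a simple count query. Adding or removing one person changes a count by at most 1 (sensitivity 1), so the noise scale is 1/ε. The patient data is made up, and naive floating-point implementations like this one are known to leak information in edge cases, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def dp_count(values: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return sum(values) + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
has_condition = [True] * 130 + [False] * 870  # hypothetical patient records
print(round(dp_count(has_condition, epsilon=0.5, rng=rng), 2))
```

A smaller ε adds more noise and gives stronger privacy; a larger ε gives more accurate answers but weaker protection, so choosing ε is a policy decision as much as a technical one.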

What is private artificial intelligence?

Private artificial intelligence refers to AI systems that prioritize the confidentiality and privacy of the data they handle. This involves using AI in a way that respects user privacy, often by employing techniques that minimize or eliminate the exposure of sensitive data.

How does AI affect our everyday lives?

AI affects our everyday lives in numerous ways, from personalized recommendations on streaming services and online shopping to voice assistants, smart home devices, and navigation systems. It enhances user experience and efficiency but also raises concerns about privacy, data security, and dependency on technology.

What is an example of privacy?

An example of privacy is the confidentiality of personal health records. This includes the right of individuals to expect their health information to be kept private and only shared with authorized persons or entities, in accordance with privacy laws like HIPAA.