In this era of data-driven innovation, data pipelines have become an indispensable tool for forward-thinking companies. Their importance cannot be overstated. However, traditional, manually managed data pipelines are gradually being replaced by the latest advancements in data pipeline automation.

The reason? As data pipelines grow more complex, the number of data-related tasks increases significantly, and handling them manually would take a lot of time and resources. Through automation, businesses can save time and resources by delegating the vast majority of these tasks to automated, often AI-driven, workflows. Automation also provides an abstraction layer for tapping into the capabilities of robust data platforms such as Snowflake.

Let us delve into this new aspect of data pipeline management.

What is Data Pipeline Automation?

Data pipeline automation is the process of automating the movement of data from one system to another.

The goal is to reduce the amount of human supervision required to keep data flowing smoothly.
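To make the definition concrete, here is a minimal sketch of an unattended job that moves new rows from one system to another. It assumes both systems are SQLite databases with a hypothetical `orders` table whose `id` values only ever increase; the highest `id` already in the target serves as a high-water mark, so the job can run on every trigger without a person checking what was already copied.

```python
import sqlite3


def sync_new_rows(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy rows added to source.orders since the last run into target.orders.

    Assumes a hypothetical `orders` table with monotonically increasing ids.
    """
    # High-water mark: the newest id the target has already received.
    (last_id,) = target.execute("SELECT COALESCE(MAX(id), 0) FROM orders").fetchone()
    rows = source.execute(
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id", (last_id,)
    ).fetchall()
    with target:  # commits on success, rolls back if the insert fails
        target.executemany("INSERT INTO orders (id, customer, amount) VALUES (?, ?, ?)", rows)
    return len(rows)


schema = "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
src.execute(schema)
dst.execute(schema)
src.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "acme", 120.0), (2, "globex", 75.5)])
print(sync_new_rows(src, dst))  # 2
src.execute("INSERT INTO orders VALUES (3, 'initech', 42.0)")
print(sync_new_rows(src, dst))  # 1
print(sync_new_rows(src, dst))  # 0: nothing new, nothing duplicated
```

Because the job is idempotent, running it more often than necessary is harmless, which is what lets it run without supervision.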

Here is a simple example that a layperson can relate to.

In earlier versions of Windows, antivirus software had to be executed manually. Now, most users do not even know that Microsoft Defender is running in the background.

It is the same with data pipeline automation.

Why is Data Pipeline Automation Necessary?

Data pipeline automation is necessary due to the vast amount of data that is generated.

For the last few decades, businesses have increasingly adopted software to support their processes. Software is used to manage sales, accounting, customer relationships and services, the workforce, and other aspects.

A useful byproduct is the generation of copious amounts of data.

Additionally, data pipeline automation facilitates the seamless movement and transformation of data, enhancing its value.

Benefits of Data Pipeline Automation

Data pipelines act as catalysts that bridge the gap between data generation and utilization. Automation makes them more efficient and less prone to errors.

Data pipeline automation can offer several benefits for your business, such as:

- Maximizing returns on your data through advanced analytics and better customer insights.

- Identifying and monetizing “dark data” with improved data utilization.

- Improving organizational decision-making on your way to establishing a data-driven company culture.

- Providing easy access to data with improved mobility.

- Giving easier access to cloud-based infrastructure and data pipelines.

- Increasing efficiency by streamlining the data integration process and reducing the time and effort required to move and transform data.

- Improving accuracy by reducing the risk of human error and ensuring data quality and consistency.

- Lowering costs by reducing manual oversight, reducing maintenance overhead, and optimizing resource utilization.

Watch on-demand- MS Copilot & Enterprise Productivity: Everything You Need to Know

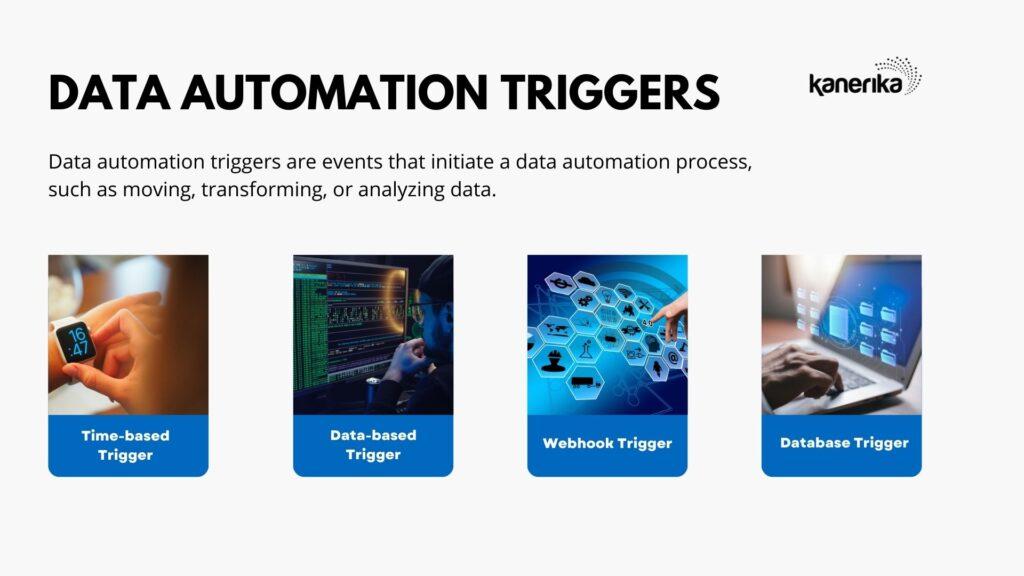

Types of Data Automation Triggers

Data automation triggers are events that initiate a data automation process, such as moving, transforming, or analyzing data. Data automation triggers can be based on various criteria, such as:

Time

The data automation process runs on a predefined schedule, such as daily, weekly, or monthly. For example, you can use a time-based trigger to send a weekly sales report to your manager.
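At the core of a time-based trigger is a calculation of when the job should fire next. The sketch below is a minimal illustration using naive local datetimes (time zones are deliberately ignored); `next_weekly_run` is a hypothetical helper, not part of any scheduler. In practice, cron or an orchestrator's scheduler does this for you: the cron expression `0 8 * * 1` means 08:00 every Monday.

```python
from datetime import datetime, timedelta


def next_weekly_run(now: datetime, weekday: int, hour: int) -> datetime:
    """Return the next time a weekly job (e.g. a sales report) should fire.

    weekday follows datetime.weekday(): Monday == 0 ... Sunday == 6.
    """
    # Move to the requested hour on the same day, then forward to the weekday.
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:  # this week's slot has already passed
        candidate += timedelta(days=7)
    return candidate


# 3 January 2024 is a Wednesday; the next Monday 08:00 is 8 January.
print(next_weekly_run(datetime(2024, 1, 3, 10, 0), weekday=0, hour=8))  # 2024-01-08 08:00:00
```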

Data

The data automation process runs when a specific data condition is met. These can be a change in a field value, a new record added, or a threshold reached. For example, you can use a data-based trigger to send an alert when an inventory level falls below a certain value.
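A data-based trigger is essentially a condition evaluated against fresh data. The minimal sketch below assumes inventory levels arrive as snapshots keyed by a hypothetical SKU code. It compares each snapshot with the previous one so that an item fires once when it drops below the threshold, rather than on every poll while it stays low.

```python
def low_stock_events(previous: dict[str, int], current: dict[str, int], threshold: int) -> list[str]:
    """Return SKUs whose inventory level has just dropped below the threshold."""
    events = []
    for sku, level in sorted(current.items()):
        before = previous.get(sku)
        was_low = before is not None and before < threshold
        # Fire only on the transition into the "low" state.
        if level < threshold and not was_low:
            events.append(sku)
    return events


# A drops from 12 to 8, B was already low, C is new and low.
print(low_stock_events({"A": 12, "B": 3}, {"A": 8, "B": 2, "C": 1}, threshold=10))  # ['A', 'C']
```

Each returned SKU would then be handed to whatever sends the alert (email, chat, ticketing), which this sketch leaves out.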

Webhook

The data automation process runs when an external service sends an HTTP request to a specified URL. For example, you can use a webhook trigger to update a customer record when they fill out a form on your website.
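The sketch below shows the receiving side of a webhook using only Python's standard library. The URL path, the JSON payload shape (an object with an `email` field), and the in-memory `CUSTOMERS` store are all hypothetical stand-ins for a real form service and CRM. Production webhooks usually also verify a shared-secret signature sent by the caller, which this sketch omits.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory customer store keyed by email; a real flow would call a CRM.
CUSTOMERS: dict[str, dict] = {}
WEBHOOK_PATH = "/webhooks/form-submitted"  # hypothetical URL registered with the form service


def apply_form_submission(store: dict[str, dict], payload: dict) -> dict:
    """Create or update the customer record described by one form submission."""
    email = payload["email"].strip().lower()
    record = store.setdefault(email, {"email": email})
    # Merge the other submitted fields; the normalized email stays the key.
    record.update({k: v for k, v in payload.items() if k != "email"})
    return record


class FormWebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != WEBHOOK_PATH:
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            apply_form_submission(CUSTOMERS, payload)
        except (ValueError, KeyError, TypeError, AttributeError):
            # Malformed JSON, a non-object body, or a missing/non-string email.
            self.send_error(400, "expected a JSON object with an 'email' field")
            return
        self.send_response(204)  # accepted; no response body
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Block and handle webhook calls on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), FormWebhookHandler).serve_forever()
```

Keeping the update logic in `apply_form_submission`, separate from the HTTP handler, lets it be tested without starting a server.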

Database

The data automation process runs when a specific operation is performed on a SQL database, such as Oracle. These operations include inserting, updating, or deleting data. For example, you can use a database trigger to audit the changes made to a table.
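As an illustration of the audit example, the sketch below uses SQLite (through Python's built-in `sqlite3` module) as a stand-in for a production database; Oracle and other engines use their own trigger syntax. The `customers` and `customers_audit` tables are hypothetical. Each trigger fires inside the database whenever a row is inserted, updated, or deleted, so no application code has to remember to write the audit record.

```python
import sqlite3

AUDIT_SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL, tier TEXT);
CREATE TABLE customers_audit (
    audit_id INTEGER PRIMARY KEY AUTOINCREMENT,
    customer_id INTEGER,
    operation TEXT,
    old_tier TEXT,
    new_tier TEXT,
    changed_at TEXT DEFAULT CURRENT_TIMESTAMP
);
-- One trigger per operation; NEW/OLD refer to the affected row.
CREATE TRIGGER customers_audit_insert AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_audit (customer_id, operation, old_tier, new_tier)
    VALUES (NEW.id, 'INSERT', NULL, NEW.tier);
END;
CREATE TRIGGER customers_audit_update AFTER UPDATE ON customers
BEGIN
    INSERT INTO customers_audit (customer_id, operation, old_tier, new_tier)
    VALUES (OLD.id, 'UPDATE', OLD.tier, NEW.tier);
END;
CREATE TRIGGER customers_audit_delete AFTER DELETE ON customers
BEGIN
    INSERT INTO customers_audit (customer_id, operation, old_tier, new_tier)
    VALUES (OLD.id, 'DELETE', OLD.tier, NULL);
END;
"""

conn = sqlite3.connect(":memory:")
conn.executescript(AUDIT_SCHEMA)
conn.execute("INSERT INTO customers (email, tier) VALUES (?, ?)", ("ada@example.com", "basic"))
conn.execute("UPDATE customers SET tier = ? WHERE id = ?", ("gold", 1))
conn.execute("DELETE FROM customers WHERE id = ?", (1,))

for row in conn.execute(
    "SELECT customer_id, operation, old_tier, new_tier FROM customers_audit ORDER BY audit_id"
):
    print(row)
# (1, 'INSERT', None, 'basic')
# (1, 'UPDATE', 'basic', 'gold')
# (1, 'DELETE', 'gold', None)
```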

Best Practices for Data Pipeline Automation

As with most new technologies, implementing a data pipeline automation tool can seem difficult. Keep these basic principles in mind when introducing such a change.

Use a modular approach

Data pipelines are complex. You do not have to automate data orchestration and data transformation in a single attempt.

Break it down and implement it in phases. This makes it easier to understand and troubleshoot the pipeline automation.
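One way to keep automation modular is to write each step as a small, independent function and compose them. The minimal sketch below assumes records are plain dictionaries with a hypothetical `email` field; the stage names and the two phase lists are illustrative only. Each phase adds stages without changing the ones already in place, and any single stage can be run on its own when troubleshooting.

```python
from typing import Callable

Record = dict[str, object]
Stage = Callable[[list[Record]], list[Record]]


def drop_incomplete(records: list[Record]) -> list[Record]:
    """Discard records that have no email value."""
    return [r for r in records if r.get("email")]


def normalize_email(records: list[Record]) -> list[Record]:
    """Trim whitespace and lowercase emails, returning new dicts."""
    return [{**r, "email": str(r["email"]).strip().lower()} for r in records]


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first record seen for each email."""
    seen, out = set(), []
    for r in records:
        if r["email"] not in seen:
            seen.add(r["email"])
            out.append(r)
    return out


def run_pipeline(records: list[Record], stages: list[Stage]) -> list[Record]:
    """Apply each stage in order; stages never depend on each other's internals."""
    for stage in stages:
        records = stage(records)
    return records


PHASE_1 = [drop_incomplete]                            # automate the simplest step first
PHASE_2 = [drop_incomplete, normalize_email, deduplicate]  # extend once phase 1 is trusted

raw = [{"email": " Ada@Example.com "}, {"email": "ada@example.com"}, {"email": None}, {}]
print(len(run_pipeline(raw, PHASE_1)))  # 2
print(run_pipeline(raw, PHASE_2))       # [{'email': 'ada@example.com'}]
```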

Go slow

There is no need to do it all in a month or even six months. Every time you increase automation, evaluate the system and whether it can truly work unassisted.

After all, an automated system that is meant to cut down on manpower is pointless if it still needs supervisors.

Data quality assurance

Validate data at each stage, perform data profiling, and conduct regular audits. Establish data quality metrics and monitor them continuously to rectify any issues promptly.
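Stage-level validation with quality metrics can be sketched as a set of named rules applied to every row. The rules below describe a hypothetical orders feed, and the `min_pass_rate` threshold of 95% is an arbitrary example value. Rejected rows are kept aside for auditing, per-rule failure counts become the metrics to monitor, and the stage stops the pipeline outright if quality falls below the threshold.

```python
from typing import Callable

# Hypothetical rules for an orders feed: rule name -> predicate on one row.
RULES: dict[str, Callable[[dict], bool]] = {
    "order_id present": lambda row: bool(row.get("order_id")),
    "amount non-negative": lambda row: isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0,
    "currency has 3 letters": lambda row: isinstance(row.get("currency"), str)
    and len(row["currency"]) == 3
    and row["currency"].isalpha(),
}


def validate_stage(rows: list[dict], rules=RULES, min_pass_rate: float = 0.95):
    """Split rows into valid and rejected, and compute simple quality metrics."""
    valid, rejected = [], []
    failures = {name: 0 for name in rules}
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        for name in failed:
            failures[name] += 1
        (rejected if failed else valid).append(row)
    pass_rate = len(valid) / len(rows) if rows else 1.0
    metrics = {"rows": len(rows), "pass_rate": pass_rate, "failures_by_rule": failures}
    if pass_rate < min_pass_rate:
        # Fail fast so bad data never reaches the next stage.
        raise ValueError(f"data quality below threshold: {metrics}")
    return valid, rejected, metrics
```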

Automation monitoring

Establish comprehensive monitoring and alerting systems to keep track of pipeline performance. Monitor data flow, processing times, and any anomalies or errors.
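A basic form of this monitoring is to wrap every stage so that its row counts and processing time are logged and obvious problems raise an alert. In the sketch below, `alert` is any callable you supply (a hypothetical hook for email, chat, or paging), and the 60-second limit and the "stage dropped every row" anomaly rule are example choices, not recommendations.

```python
import logging
import time
from typing import Callable

logger = logging.getLogger("pipeline.monitor")


def run_monitored(
    stage_name: str,
    stage: Callable[[list], list],
    records: list,
    alert: Callable[[str], None],
    max_seconds: float = 60.0,
) -> list:
    """Run one pipeline stage, log its metrics, and alert on errors or anomalies."""
    start = time.perf_counter()
    try:
        result = stage(records)
    except Exception as exc:
        alert(f"{stage_name} failed: {exc!r}")
        raise  # still fail the run; the alert is in addition to the error
    elapsed = time.perf_counter() - start
    logger.info(
        "stage=%s rows_in=%d rows_out=%d seconds=%.3f",
        stage_name, len(records), len(result), elapsed,
    )
    if elapsed > max_seconds:
        alert(f"{stage_name} took {elapsed:.1f}s (limit {max_seconds}s)")
    if records and not result:
        alert(f"{stage_name} dropped all {len(records)} rows")
    return result


logging.basicConfig(level=logging.INFO)
run_monitored("dedupe", lambda rs: list(dict.fromkeys(rs)), ["a", "a", "b"], alert=print)
```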

Testing and validation

Establish a rigorous testing and validation process for data pipeline automation. Test various scenarios, including edge cases, to ensure the accuracy and reliability of the pipeline.
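Edge-case tests for a pipeline step can be as simple as plain test functions. The transform `normalize_orders` below is hypothetical, written only so there is something to test; the point is the cases it covers: empty input, missing or blank keys, duplicates, missing values, and malformed values that should fail loudly. A test runner such as pytest would discover the `test_*` functions automatically.

```python
def normalize_orders(rows: list[dict]) -> list[dict]:
    """Hypothetical transform: drop rows without an id, dedupe ids, parse amounts."""
    seen, out = set(), []
    for row in rows:
        order_id = (row.get("order_id") or "").strip()
        if not order_id or order_id in seen:
            continue
        seen.add(order_id)
        # float() raises ValueError on malformed amounts instead of hiding them.
        out.append({"order_id": order_id, "amount": round(float(row.get("amount") or 0), 2)})
    return out


def test_empty_input():
    assert normalize_orders([]) == []


def test_missing_and_blank_ids_are_dropped():
    assert normalize_orders([{"amount": "5"}, {"order_id": "  ", "amount": "1"}]) == []


def test_duplicates_keep_first_occurrence():
    rows = [{"order_id": "A1", "amount": "10.50"}, {"order_id": "A1", "amount": "99"}]
    assert normalize_orders(rows) == [{"order_id": "A1", "amount": 10.5}]


def test_missing_amount_defaults_to_zero():
    assert normalize_orders([{"order_id": "B2"}]) == [{"order_id": "B2", "amount": 0.0}]


def test_non_numeric_amount_is_rejected_loudly():
    try:
        normalize_orders([{"order_id": "C3", "amount": "n/a"}])
    except ValueError:
        return
    raise AssertionError("expected ValueError for a non-numeric amount")
```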

Continuous innovation

Treat data pipeline automation as an iterative process. Regularly review and assess the performance and efficiency of your pipelines.

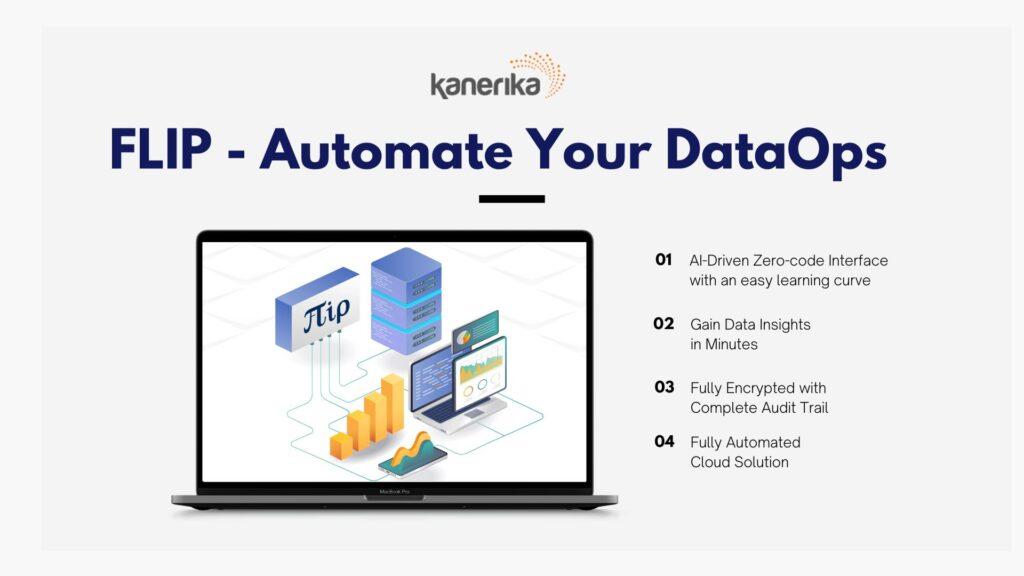

Revolutionize Data Pipeline Automation with FLIP

Do your developers spend endless hours manually managing your data pipelines? Look no further, because FLIP is here to transform your data experience!

FLIP is a DataOps Automation tool designed by Kanerika to automate your data transformation effortlessly. The principal objective is to ensure smooth and efficient data flow to all stakeholders.

Not only is FLIP user-friendly, but it also offers unbeatable value for your investment.

Here’s what FLIP brings to the table:

- Automation: Say goodbye to manual processes and let FLIP handle the heavy lifting. It automates the entire data transformation process, freeing up your time and resources for more critical tasks.

- Zero-Code Configuration: No coding skills? No problem! FLIP’s intuitive interface allows anyone to configure and customize their data pipelines effortlessly, eliminating the need for complex programming.

- Seamless Integration: FLIP seamlessly integrates with your existing tools and systems. Our product ensures a smooth transition and minimal disruption to your current workflow.

- Advanced Monitoring and Alerting: FLIP provides real-time monitoring of, and insights into, your data transformations. Stay in control and never miss a beat.

- Scalability: As your data requirements grow, FLIP grows with you. It is designed to handle large-scale data pipelines, accommodating your expanding business needs without compromising performance.

To experience FLIP, sign up for a free account today!

FAQ

Can Data Pipeline Automation work with cloud-based infrastructure and data pipelines?

Yes, Data Pipeline Automation can seamlessly integrate with cloud-based infrastructure and data pipelines, providing easier access to, and management of, data stored in the cloud.

How does Data Pipeline Automation enhance data quality and consistency while reducing the risk of human error?

Automation ensures data quality and consistency by following predefined rules and processes, reducing the risk of human error in data handling and transformation.

Why is testing and validation significant in Data Pipeline Automation, and what should be tested?

Testing and validation ensure the accuracy and reliability of data pipeline automation. Various scenarios, including edge cases, should be tested to validate the pipeline's performance and functionality.

How important is data quality assurance in Data Pipeline Automation, and what steps should be taken to ensure it?

Data quality assurance is crucial. It involves validating data at each stage, performing data profiling, conducting regular audits, and monitoring data quality metrics to promptly address any issues.

How can a modular approach benefit the implementation of Data Pipeline Automation?

Using a modular approach allows for a phased implementation of data automation, making it easier to understand and troubleshoot. It breaks down complex pipelines into manageable phases.

What role does automation monitoring play in Data Pipeline Automation, and what aspects should be monitored?

Automation monitoring involves tracking pipeline performance, data flow, processing times, and detecting anomalies or errors. Comprehensive monitoring and alerting systems are essential for ensuring smooth operations.

How scalable is FLIP in handling data pipelines, especially as business data requirements grow?

FLIP is highly scalable and designed to handle large-scale data pipelines. It can accommodate expanding business needs without compromising performance, ensuring data pipeline scalability.

What are the key benefits of using FLIP for Data Pipeline Automation?

FLIP offers several benefits, including automation of data transformation, zero-code configuration, seamless integration with existing systems, advanced monitoring and alerting, and scalability to handle growing data requirements.
